How do I use LLMs to write stories?

Well, I don’t. At least, I don’t use them to write a story wholesale, or the plot, or the characters, or really any other meaningful element of a narrative. It turns out that language models, at least today, are really quite bad at telling stories. Besides, writing stories is fun – I don’t want a robot to do it for me.

Why they are so bad at telling stories is an interesting question, but if you’re a practical kind of person, you may be more interested in what they’re actually good at.

Ok, so what are they good at?

Language models, whether GPT or Claude or any of the others, are tools. If you’re a skilled writer, GPT is unlikely to write any better than you do. It could be a very expensive thesaurus or a quick way to rephrase something when your brain dies for the day, but it probably won’t improve on your writing in a significant way. In fact, it might make it worse! Trust me, I’ve read a lot of papers.

For me, these models provide a kind of interactive whiteboard. I’d prefer to go to the pub and have a beer with a colleague, but most of the time that’s not possible. Maybe I don’t have a colleague or a pub nearby, maybe it’s the wee hours of the night, maybe I just can’t handle getting dressed today. Whatever the reason, these models can serve as a conversational partner to think with when the better option (a human) is unavailable.

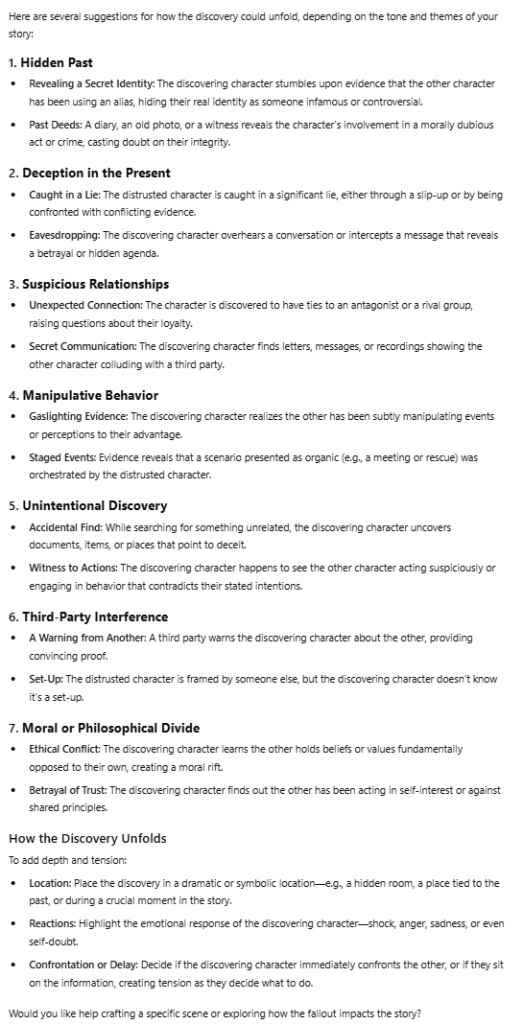

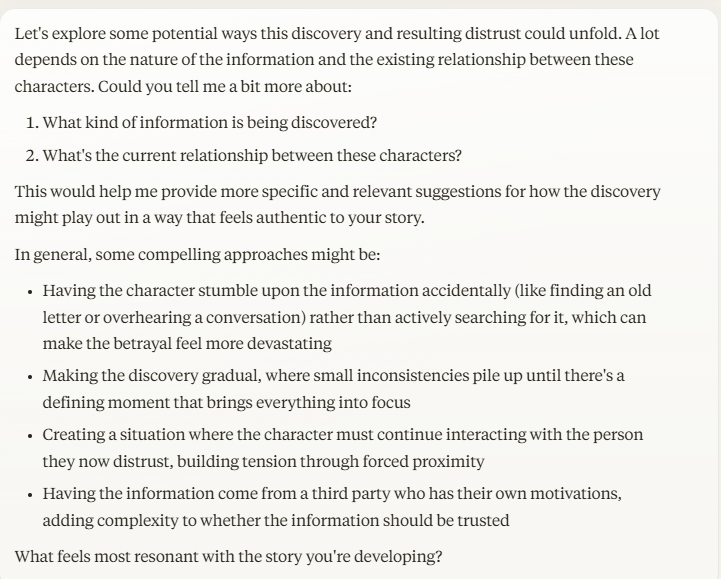

I prefer to use Claude for this. Currently, Claude 3.5 Sonnet is arguably the best model for language tasks, and it’s trained to be more of a conversational partner than GPT is. You’ll notice the difference when you interact with both. GPT tends to be more generic and less “thoughtful” with its questions, sometimes even sycophantic. I’ve placed a side-by-side comparison below that you can review.

Prompt: I’m working on an interactive story, and I’m struggling to resolve a point where one character discovers information about another character, causing them to distrust the other. What are some possible suggestions for how this discovery could play out?

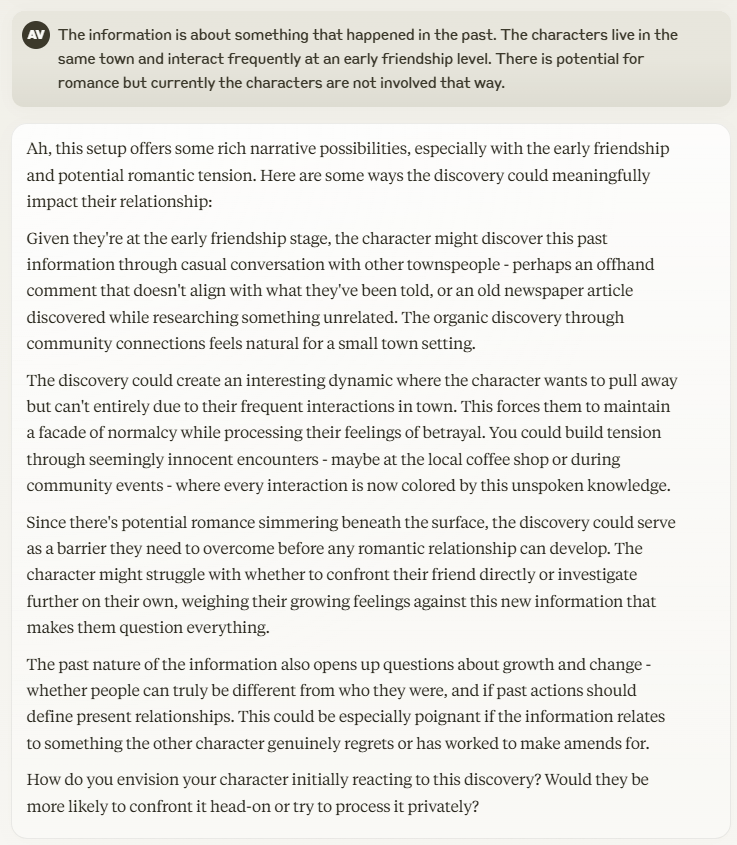

I tend to start with a question about whatever I’m stuck on, giving the model only a very brief and vague context. I don’t find it helpful to over-engineer prompts when I’m using a model as a whiteboard, and I generally avoid sharing unique or private information (as one should). Starting vague allows me to drill down into specifics depending on where the conversation leads, much as I would with a friend or colleague. Perhaps it’s just the way I prefer to think (I tend to be more of a big-picture person than a detail-oriented one), so you should experiment for yourself.

Neither of the responses above has groundbreaking ideas in it. In fact, if you’ve read (or played/watched/listened to) many stories, you could likely come up with all of these on your own pretty easily. GPT and its like are not magical in any way. They’ve ingested some large but very incomplete amount of human-generated content, and have been trained to analyze, mimic, and generate contextually similar content.

Here’s an excerpt from an excellent paper contrasting GPT’s contextual narratives with those of an expert writer. As you can see, the human ability to surprise and create variation is far beyond what these models currently manage.

This is one of the reasons I prefer to use Claude as a thinking partner more than a writing partner. In the conversation below (which I constructed purely for this post), I didn’t start out wanting to theorize about character emotion, but I ended up there, and this unpredictability is core to how I find new ideas.

My questions about how to think strategically about emotional mixture reflect the way I think, and the model is able to prompt me with ideas that may not be groundbreaking but that let me push my own thinking further.

When it comes to actually writing this scene, though? That’s going to be just me.